At Emphie Solutions, chess is in the DNA of the company. That’s because we build and operate chessgrow.com, the online platform for teaching chess for our sister company, Chess Grow.

When the pandemic hit, Chess Grow had to rapidly build a remote learning solution - Chess Grow ClassRoom. In this article, we will tell you about the key technical element that enabled this development, our own WebRTC-based video chat component.

The article will focus on high-level solution architecture considerations and decisions. We will not provide a development tutorial or code walkthroughs, but we will reference plenty of online materials if you need them to get started on your own WebRTC project.

Web-based video chat - buy or build?

When we sat down to figure out how to integrate video chat into the Chess Grow web platform, it was clear that this was a challenging task of a kind we don’t tackle every day. We had to start by researching implementation options. As you might expect, we found that we could either:

- Use ready-made commercial components that can be embedded right away.

- Learn how to build a video-chat component ourselves.

Each of those options came with its own pros and cons:

| | Pros | Cons |
| --- | --- | --- |
| Proprietary software | | |
| In-house implementation | | |

After careful consideration, we decided to try developing an in-house component, keeping in mind that we could always fall back to a proprietary solution.

Getting started with WebRTC

As a starting point for our implementation, we chose the WebRTC standard, which enables Real-Time Communication between browsers. The standard has been around for a while and enjoys broad support in desktop and mobile browsers.

WebRTC enables the use of the Real-time Transport Protocol in compatible web browsers. This is immensely helpful, as it covers:

- Connectivity – real-time connections between peers can be established without the peers having to know each other’s machine addresses in advance. This is achieved using Interactive Connectivity Establishment (ICE).

- Browser integration – audio and video streams are captured and sent to other peers by the browser. Received streams can be embedded into a web page using a simple <video> tag.

- Security – all WebRTC traffic is encrypted. Exchange of encryption keys and other steps necessary to establish a secure connection are also handled by the browser.

From a developer’s perspective, the best places we found to get familiar with the WebRTC API are the Mozilla developer docs and the Google developers info page. The official W3C papers are also helpful.

The WebRTC API itself is rather complex, but luckily there are third-party libraries available that make it easier to use. For our project, we decided to use PeerJS, which helped us greatly reduce the time needed to build a working prototype.

Establishing direct connections, indirectly

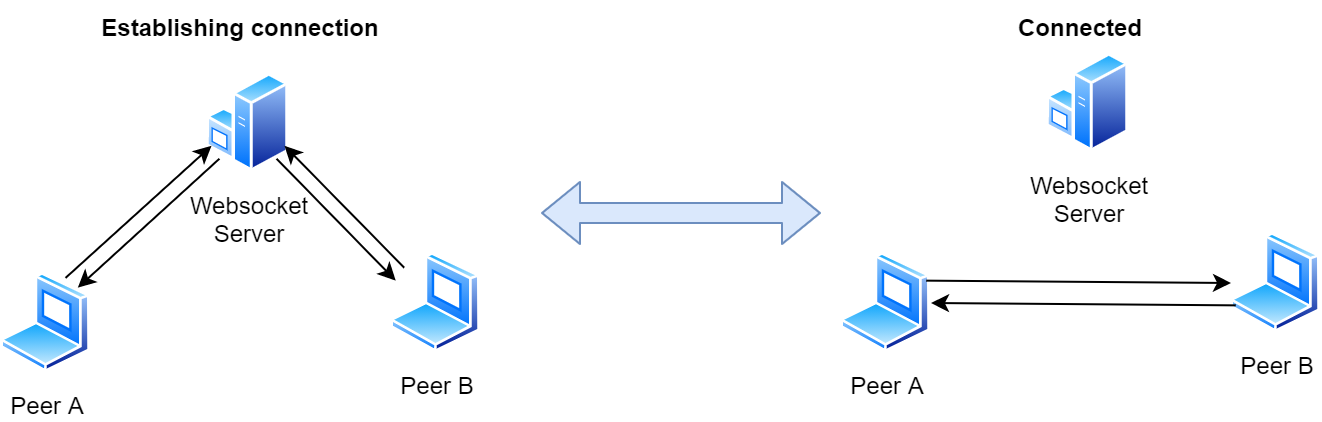

WebRTC peers can establish connections without knowing each other’s machine addresses. To do this they employ the ICE technique and exchange messages indirectly before they actually connect. Those messages contain ICE candidates, that is, all the data necessary to open a direct TCP or UDP connection, including IP addresses, available ports, protocols, encryption, and so on. The WebRTC standard does not define how this exchange of data should be performed, but typically it is implemented using WebSockets.

As you can see above, the WebSocket server facilitates the connection - and it is only used for this purpose. Once the peers are connected, the server is no longer involved in their communication.

The only situation in which the WebSocket server comes back into play is when the peer-to-peer connection is lost and needs to be established again. This can happen, for example, when one of the peers switches its internet connection from cellular to Wi-Fi. In such a scenario, both browsers will try to re-establish the connection with help from the WebSocket server.

In our case, providing the WebSocket server did not require much effort, as we already had certain features on our platform implemented using WebSockets. We extended our protocol with the messages required by PeerJS and we were done.

For those of you who don’t already have a WebSocket server in place, the developer of PeerJS is also working on a new project - PeerJS Server - which, as you likely guessed, is a WebSocket server implementation that supports PeerJS out of the box.
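To make the role of the signaling server more concrete, here is a minimal in-memory sketch of the relaying it performs. It is not our production protocol and not the PeerJS message format: the `SignalMessage` shape, the `SignalingRelay` class and the peer IDs are all hypothetical, and a real server would deliver the messages over WebSocket connections rather than callbacks.

```typescript
// Hypothetical signaling message; the SDP / ICE payload is treated as an opaque string.
type SignalKind = "offer" | "answer" | "candidate";

interface SignalMessage {
  kind: SignalKind;
  from: string;
  to: string;
  payload: string;
}

type Deliver = (message: SignalMessage) => void;

// In-memory stand-in for the WebSocket server: it only forwards signaling
// messages between registered peers and never touches audio or video.
class SignalingRelay {
  private readonly peers = new Map<string, Deliver>();

  register(peerId: string, deliver: Deliver): void {
    this.peers.set(peerId, deliver);
  }

  unregister(peerId: string): void {
    this.peers.delete(peerId);
  }

  // Returns true when the addressee is known and the message was forwarded.
  send(message: SignalMessage): boolean {
    const deliver = this.peers.get(message.to);
    if (deliver === undefined) {
      return false;
    }
    deliver(message);
    return true;
  }
}
```

Once both peers have exchanged their offer, answer and candidates this way, the relay has done its job and the media flows without it.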

The (infamous) P2P networks

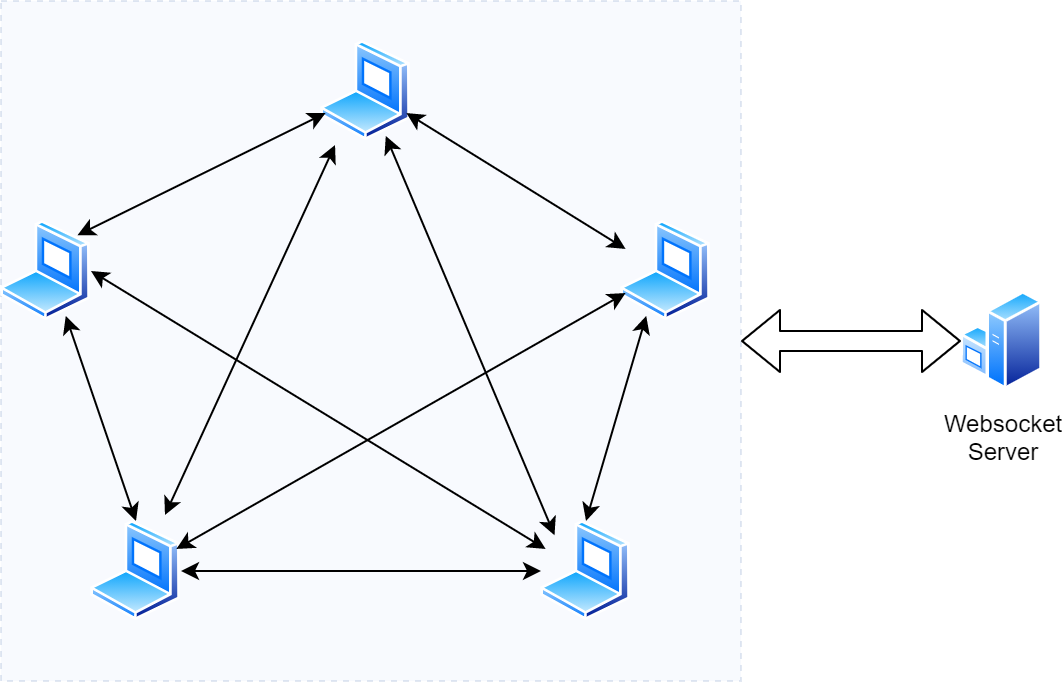

An important property of WebRTC connectivity is that it supports peer-to-peer (P2P) connections. This means that the audio and video communication between two browsers is direct and does not pass through intermediate servers.

You may expect that this can bring many benefits:

- direct connections reduce lag;

- costs of hosting are reduced, as there are no additional servers;

- connections are safe, as the data doesn’t go through external servers.

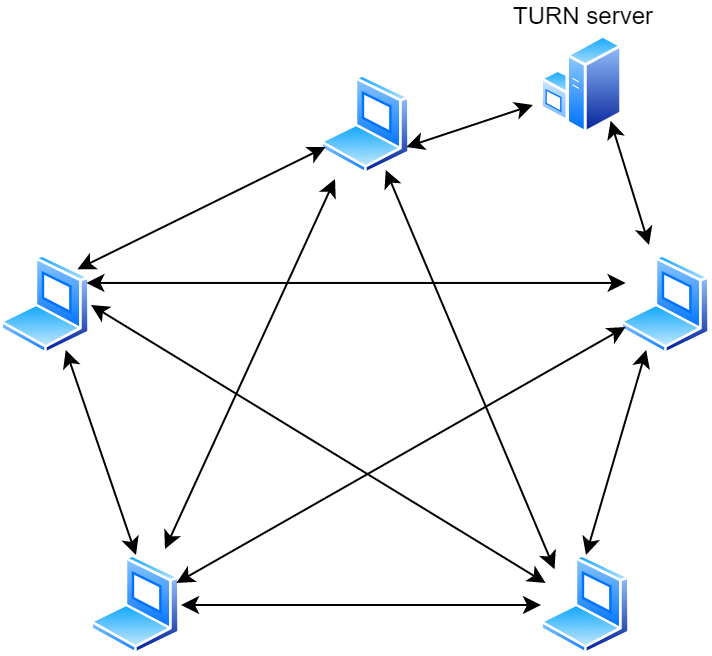

As you read on, you’ll see that in real-world situations, those benefits may not be available at all times. For the moment, however, let’s just say that when all goes well, after a short while all connections are established and a fully connected peer-to-peer network emerges.

Every pair of peers maintains its own connection. Whenever any of the peers detects that a connection is lost, it notifies the corresponding peer via the WebSocket server and attempts to reconnect.

Teething problems

When we started testing, we discovered that this solution worked great - in a local environment (via localhost). The connections failed when we tried to use our on-premise server. After investigating our web browser logs, we quickly determined that we needed to do two more things to really go online:

- Connect over HTTPS - all modern browsers will only allow the use of input devices (the camera and the microphone) on sites that use encryption.

- Set up a STUN server.

HTTPS was straightforward: we only needed to change our test environment settings. The second issue turned out to be much more interesting.

Simple Traversal of User Datagram Protocol through Network Address Translators

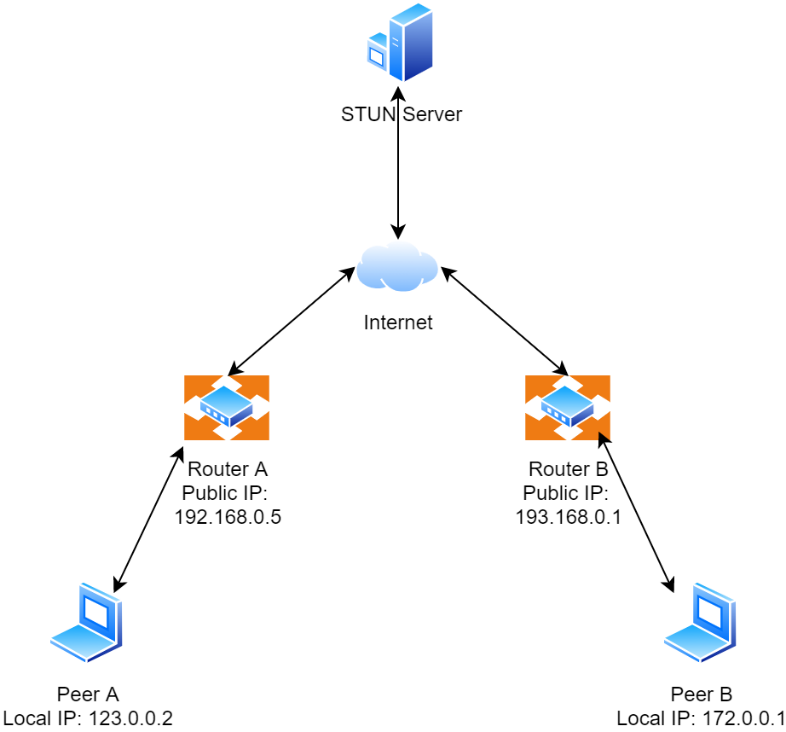

...which is what STUN used to stand for before its name was sadly shortened, is the answer to the common real-world scenario in which peers connect through different NATs. When this happens, each of the peers will only know its local IP address and only be accessible by computers and devices in the same local network (i.e. connected to the same router). To establish connections between peers beyond their local networks, we need to make it possible for them to determine their public network addresses.

Enabling this is the main purpose of STUN servers. Whenever the server receives a connection from a peer machine, it also gets the address of the last router that was involved in the connection. This address is exactly the public IP address that needs to be used to connect with the peer from external networks. All the STUN server has to do is provide this address back to the connecting peer.
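The idea can be illustrated with a toy model. This is not the STUN wire protocol, only a sketch of the principle: the `ToyNat` class, the `stunBindingResponse` function, the addresses and the starting port are all made up for illustration.

```typescript
interface Endpoint {
  address: string;
  port: number;
}

// Toy NAT: rewrites the source of outgoing packets to the router's public address.
class ToyNat {
  private readonly publicAddress: string;
  private readonly mappings = new Map<string, Endpoint>();
  private nextPort = 40000; // arbitrary starting port for this illustration

  constructor(publicAddress: string) {
    this.publicAddress = publicAddress;
  }

  translate(local: Endpoint): Endpoint {
    const key = `${local.address}:${local.port}`;
    let mapped = this.mappings.get(key);
    if (mapped === undefined) {
      mapped = { address: this.publicAddress, port: this.nextPort++ };
      this.mappings.set(key, mapped);
    }
    return mapped;
  }
}

// Toy STUN server: it simply reports back the source endpoint it observed.
function stunBindingResponse(observedSource: Endpoint): Endpoint {
  return { address: observedSource.address, port: observedSource.port };
}

const nat = new ToyNat("203.0.113.7");
const localEndpoint: Endpoint = { address: "192.168.1.10", port: 5000 };
const publicEndpoint = stunBindingResponse(nat.translate(localEndpoint));
console.log(`${publicEndpoint.address}:${publicEndpoint.port}`); // 203.0.113.7:40000
```

The peer only ever knew `192.168.1.10:5000`; the reply from the server is what tells it which address to advertise to peers outside its local network.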

The diagram in this section illustrates a network configuration in which a STUN server is used to facilitate peer-to-peer connections. Both of the peers obtain their public addresses, then exchange them and establish a direct connection.

The process of obtaining a public address does not involve any sensitive information, so it’s ok to use public STUN servers - and there are plenty of them available on the public internet. Since the process is fast, there’s also no need to worry that the public servers will get overloaded and become unresponsive.

Of course, if you’d rather avoid being dependent on external public servers, it’s wise to host one yourself. We will discuss this when we talk about TURN servers.

For our environment, after we configured HTTPS encryption and a STUN server, we were able to establish connections between peers using our own hosted solution.

More teething problems

As we were testing connections, we discovered that some of our peers were unable to receive or send video and audio streams. For some unknown reason, certain participants simply couldn’t establish connections with others.

To find out why, we isolated a pair of peers that were unable to communicate. We verified that both were able to exchange information via the WebSocket server and that both of them were starting the process of establishing the WebRTC connection. We also made sure that both of them used their public addresses - so the STUN server was working correctly.

In case you’re wondering, the tools needed to debug such connections are built into major browsers:

- In Chrome - chrome://webrtc-internals/

- In Firefox - about:webrtc
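When reading such logs, it helps to know what kind of address each ICE candidate carries. The sketch below parses the leading fields of a candidate string, such as the one exposed by the browser’s `RTCIceCandidate.candidate` property, and labels its type. The function names and the sample addresses are our own illustration, and the parser deliberately ignores everything after the type field.

```typescript
type CandidateType = "host" | "srflx" | "prflx" | "relay";

interface ParsedCandidate {
  transport: string;
  address: string;
  port: number;
  type: CandidateType;
}

const CANDIDATE_TYPES: readonly CandidateType[] = ["host", "srflx", "prflx", "relay"];

// Parses the leading fields of an ICE candidate line:
// candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
function parseCandidate(line: string): ParsedCandidate | null {
  const fields = line.trim().replace(/^a=/, "").split(/\s+/);
  const [head = "", , transport = "", , address = "", portText = "", marker = "", typeText = ""] = fields;
  if (head.indexOf("candidate:") !== 0 || marker !== "typ") {
    return null;
  }
  const type = CANDIDATE_TYPES.find((known) => known === typeText);
  const port = Number(portText);
  if (type === undefined || portText === "" || !Number.isInteger(port)) {
    return null;
  }
  return { transport: transport.toLowerCase(), address, port, type };
}

// Human-readable meaning of each candidate type.
function describeCandidate(candidate: ParsedCandidate): string {
  switch (candidate.type) {
    case "host":
      return "local address";
    case "srflx":
      return "public address discovered via STUN";
    case "prflx":
      return "address discovered during connectivity checks";
    case "relay":
      return "address allocated on a TURN relay";
  }
}

const sample = "candidate:842163049 1 udp 1677729535 203.0.113.5 46154 typ srflx raddr 192.168.1.10 rport 46154";
const parsed = parseCandidate(sample);
if (parsed !== null) {
  console.log(`${parsed.address}:${parsed.port} is a ${describeCandidate(parsed)}`);
}
```

If a peer only ever offers `host` candidates, its STUN lookup is probably failing; if a pair of peers offers `srflx` candidates and still cannot connect, the problem lies elsewhere in the network.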

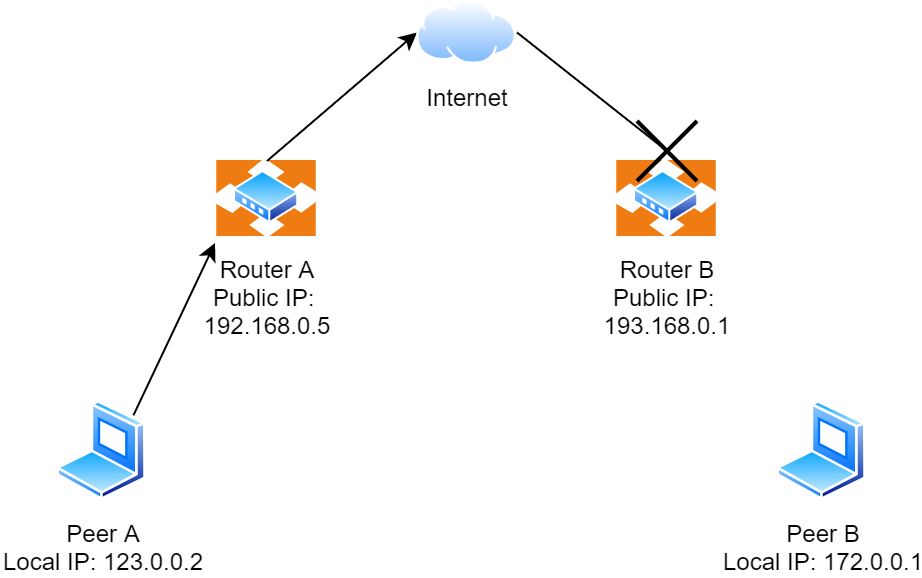

What we found out, in the end, is that in some cases establishing a P2P connection between two peers is simply not possible. The reason for this is network architecture.

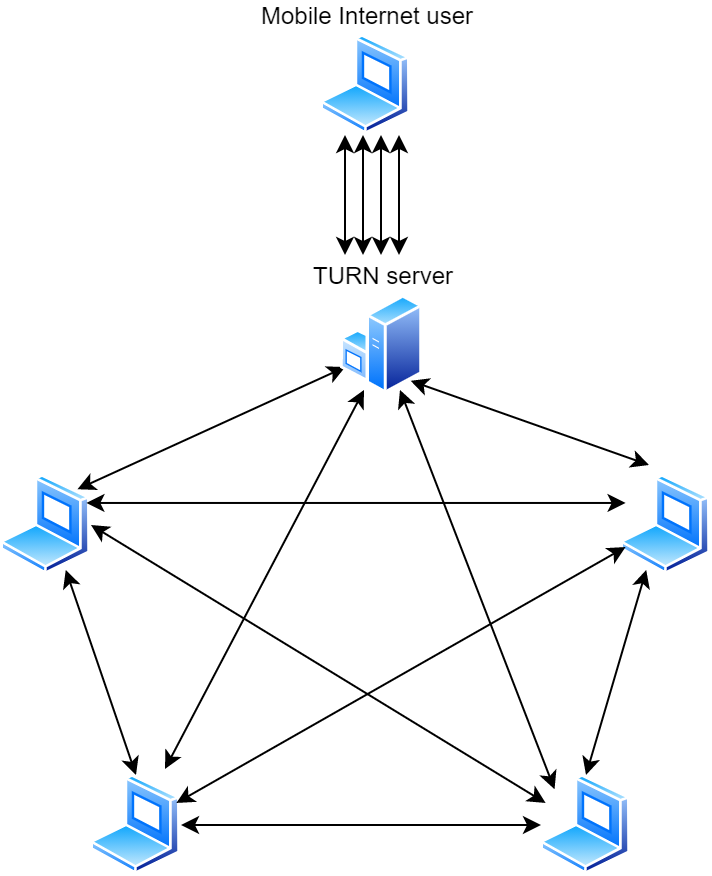

The diagram above illustrates the issue. When Peer A tries to connect to Peer B using its public address, Router B may simply block the connection. There may be different reasons for this. Router B might not support port forwarding, or it may be intentionally configured by a network administrator to block peer-to-peer connections out of concern about the use of illicit P2P networks.

This may sound grim, but where there's a need there’s a workaround. When establishing direct connections is impossible, ICE has the peers fall back to using a relay, also known as a TURN server.

Traversal Using Relays around Network Address Translators

...or TURN, receives a well-deserved second place in the category of this article’s longest tech names.

The main role of a TURN server is to accept connections from peers that are unable to connect directly and relay their message streams. Similarly to the STUN server, a TURN server must be accessible to all of the peers that may need to use it, so it must have a public internet address.

Implementing a TURN server is far from trivial and would take a lot of effort, so we decided to reach for an open-source implementation used in many commercial solutions, called Coturn. The great thing about Coturn is that it also supports the STUN protocol, which means that by deploying Coturn we get both a STUN and a TURN server.
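On the client side, a single Coturn instance then shows up as two entries in the ICE server list. The sketch below builds such a configuration. The host name and credentials are placeholders, `buildIceConfig` is a hypothetical helper, and we assume the server listens on the default STUN/TURN port 3478 and uses username/password authentication.

```typescript
// Shape mirrors the `iceServers` entries accepted by the browser's RTCPeerConnection.
interface IceServer {
  urls: string | string[];
  username?: string;
  credential?: string;
}

interface IceConfig {
  iceServers: IceServer[];
}

// Builds one STUN and one TURN entry that point at the same server.
function buildIceConfig(host: string, username: string, credential: string, port = 3478): IceConfig {
  return {
    iceServers: [
      { urls: `stun:${host}:${port}` },
      { urls: `turn:${host}:${port}`, username, credential },
    ],
  };
}

// Placeholder values for illustration only.
const iceConfig = buildIceConfig("turn.example.com", "demo-user", "demo-secret");
console.log(JSON.stringify(iceConfig, null, 2));
```

In a browser, an object of this shape can be passed to `new RTCPeerConnection(iceConfig)`; PeerJS accepts the same object through the `config` option of its `Peer` constructor.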

To help deploy our Coturn setup, we decided to build our own Docker image. If you ever decide to do the same, remember to enable host networking in Docker. The amount of traffic generated by the TURN protocol is too much to handle for Docker’s default bridge networking.

Real-world evaluation

After further testing, we decided our video chat implementation was good enough to be used in real chess classes. The final necessary element was to distribute the chat room names that our participants had to know in order to join our class chat rooms. Since our students are mostly young children, our teachers usually provide the chat room names to the parents using SMS, WhatsApp, or any of the other available messaging channels.

The process of joining classes consists of the following steps:

- The user opens our welcome page and fills in their name and the chat room name.

- The user’s browser connects to our web server to get a list of chat participants.

- For each of the participants, the browser initializes the process of establishing a connection (as described in the previous sections).

To our great joy, the solution worked as expected!

Naturally, we had to solve a couple of minor bugs - like the one when a student couldn't join classes because his computer did not have a webcam - but after a few rounds of feedback and bug fixing, we arrived at a stable solution.

Key takeaway: purely peer-to-peer video chat is not feasible

We set out to implement our video chat using nothing but peer-to-peer connections, but due to the realities of network connectivity, we couldn’t get a fully working solution without a TURN server.

As illustrated above, this meant a change to the architecture but didn’t entirely eliminate the P2P nature of our solution. Most connections remain peer-to-peer. Relaying data through the TURN server only happens when absolutely necessary, not least because a relayed connection may introduce delays.

In the end, a relayed connection is better than no connection, and in our experiments the delays introduced by the TURN server were not noticeable. That’s also because we hosted our TURN server in a location as close as possible to our users to reduce round-trip times.

What about privacy and security? The TURN server is a relay, but it doesn’t interact with the data that it passes along. Even if an attacker wanted to intercept the communication, the data the peers are sending is encrypted end to end. The peers negotiate the encryption keys between themselves in a DTLS handshake and verify each other using certificate fingerprints exchanged through the separate WebSocket server when they establish a connection. The TURN server never holds the keys, so there’s no way to decrypt the passing data there. So in the end, neither security nor privacy is compromised.

One of our key practical observations is that mobile networks greatly increase the number of relayed connections. Peers connecting using mobile networks are the ones that are most likely to be forced to use TURN servers. As a result, the remaining participants also have to switch to the relay when connecting with mobile peers.

Bandwidth requirements

With the technical challenges solved, what was left was to find a cost-effective way to operate our video chat. One of the key cost factors will be bandwidth, so we started by estimating bandwidth requirements.

The requirements will result from the number of connections that our solution will need to handle. How many connections do we need to expect? Let’s start with a baseline.

With P participants sending data directly to all others in a fully connected peer-to-peer network, the total number of connections is:

S = P * (P - 1) / 2

In this ideal case, all connections are direct and we will not have to cover their bandwidth costs.

Everything changes, though, as the number of participants that need to connect through the TURN server increases. In fact, the main cost factor of a WebRTC solution is the TURN server bandwidth, which is a direct consequence of the amount of data generated by the relayed video calls.

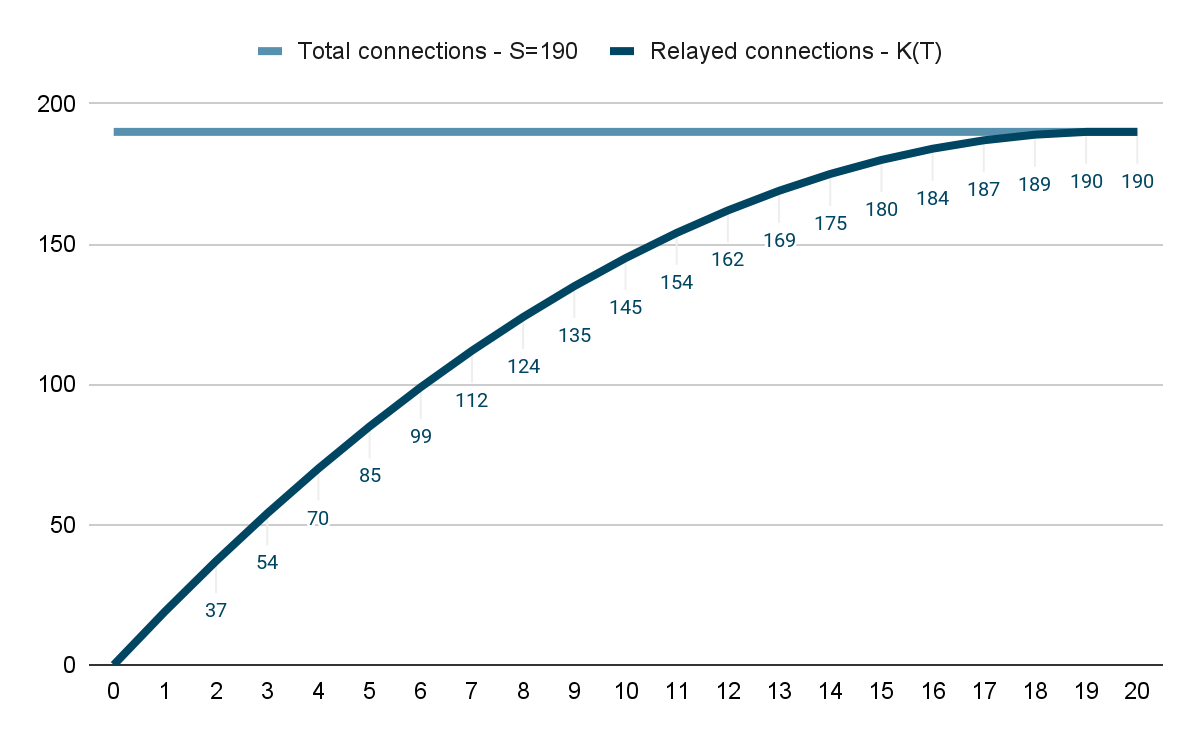

When T participants need to connect via the TURN server, typically all of their connections get relayed (as the reason is a network that blocks peer-to-peer traffic). In such a case, the number of connections going through the relay is:

K = T * ( P - (T + 1) / 2 )
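The formula can be checked by counting the connections that stay direct instead. The following derivation is a sketch under the assumption stated above, namely that a connection is relayed as soon as at least one of its two peers needs the relay, so that only the remaining P - T peers keep a fully connected direct network among themselves:

```latex
\begin{aligned}
K &= \frac{P(P-1)}{2} - \frac{(P-T)(P-T-1)}{2} \\
  &= \frac{2PT - T^{2} - T}{2} \\
  &= T\left(P - \frac{T+1}{2}\right)
\end{aligned}
```

For T = P the expression reduces to P * (P - 1) / 2, the total number of connections S, as expected.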

What this means in practice is that the TURN server traffic increases rapidly with each participant that connects via the relay. The following graph illustrates what happens when, for example, 20 participants (P = 20) increasingly switch from peer-to-peer to relayed connections.

The TURN server traffic doesn’t go up in a linear fashion. As you’ll notice, it’s enough that 6 out of 20 participants switch to the relay and the TURN server will already have to handle over 50% of all connections. When 10 participants switch to the relay, about 75% of connections will need to go through the TURN server.

You may find statistics online which claim that around 30% of video conference participants are typically forced to connect through a relay. In reality, however, we have to be prepared for the worst-case scenario – that almost all of the participants will be using TURN servers.
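These percentages can be reproduced with a few lines of code. The sketch below implements the two formulas for S and K from this section; the function names are ours, and the values of T are just sample points.

```typescript
// S: number of connections in a fully connected network of P participants.
function meshConnections(participants: number): number {
  return (participants * (participants - 1)) / 2;
}

// K: connections that involve at least one of the T relayed participants.
function relayedConnections(participants: number, relayed: number): number {
  return relayed * (participants - (relayed + 1) / 2);
}

// Fraction of all connections that go through the TURN server.
function relayedShare(participants: number, relayed: number): number {
  return relayedConnections(participants, relayed) / meshConnections(participants);
}

const totalParticipants = 20;
for (const t of [0, 6, 10, 20]) {
  const percent = (relayedShare(totalParticipants, t) * 100).toFixed(1);
  console.log(`T = ${t}: ${relayedConnections(totalParticipants, t)} of ${meshConnections(totalParticipants)} connections (${percent}%)`);
}
// T = 0: 0 of 190 connections (0.0%)
// T = 6: 99 of 190 connections (52.1%)
// T = 10: 145 of 190 connections (76.3%)
// T = 20: 190 of 190 connections (100.0%)
```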

We thus assume that our server and network need to have all the bandwidth necessary to handle P participants (so T = P). As each of our connections is bi-directional (video streams are transmitted in both directions), we can also assume that upload and download traffic will be symmetric. Taking this into consideration, the final formula is:

U = D = P * (P - 1) / 2 * B

Where:

U – is the total upload bandwidth

D – is the total download bandwidth

B – is the bandwidth required by a video stream going in one direction

The video stream bandwidth B depends on many factors:

- compression codec used – WebRTC supports VP8 and AVC/H.264 for video, and Opus and G.711 PCM for audio.

- resolution and frame rate

- content – video with a lot of detail and movement will require much more bandwidth than e.g. a static shot of a plain coloured wall.

- network performance – WebRTC adapts video quality to network conditions such as available bandwidth and latency.

Estimating B is thus not a trivial task, and we didn’t attempt to do it on our own. On the basis of available research, we assumed that we would need around 1 to 1.5 Mbps per video stream.
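To get a feel for what this assumption means, the worst-case formula U = D = P * (P - 1) / 2 * B can be evaluated for a few class sizes. This is a sketch of the article’s estimate only; the function name and the sample class sizes are ours.

```typescript
// U = D = P * (P - 1) / 2 * B, the worst-case estimate in which every
// connection is relayed (T = P). The result is in the same unit as B (Mbps here).
function relayBandwidthMbps(participants: number, streamMbps: number): number {
  return ((participants * (participants - 1)) / 2) * streamMbps;
}

for (const participants of [5, 10, 20]) {
  const low = relayBandwidthMbps(participants, 1);
  const high = relayBandwidthMbps(participants, 1.5);
  console.log(`${participants} participants: ${low} to ${high} Mbps in each direction`);
}
// 5 participants: 10 to 15 Mbps in each direction
// 10 participants: 45 to 67.5 Mbps in each direction
// 20 participants: 190 to 285 Mbps in each direction
```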

If you would like to check what video bandwidth would work well for your product, play with this WebRTC sample page, which shows a video quality preview for any chosen bandwidth.

Costs

So far, we have talked about the following components required to build a WebRTC video chat solution:

- Signaling server – our WebSocket server

- Web server – hosting the application itself

- TURN server – our Coturn instance

- STUN server – the same Coturn instance

Costs for the first two components can be estimated as for any web development project, so we won’t go into detail here. The STUN server, which never gets heavily loaded, is also a minor cost factor. The primary cost factor for our video chat will be the traffic running through the TURN server.

There are two considerations that we need to take into account here:

- Bandwidth – upload and download (incoming and outgoing traffic) as estimated above;

- Transfer limits – hosting services often limit the amount of data transferred to and from a server.

Assuming for example that our solution will be used 4 times a month for 1 hour by 5 participants, we should expect:

- Upload and download bandwidth needs to be at least:

U = D = 5 * 4 / 2 * 1 Mbps = 10 Mbps

- Each meeting will use bi-directional video for an hour:

(10 Mbps + 10 Mbps) * 3600 s = 72000 Mb (Megabits) = 9000 MB (Megabytes)

Since we plan to have 4 meetings in a month, we will transfer a total of 36000 MB through both upload and download.
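The same arithmetic can be written down as a small calculation, which makes it easy to plug in your own numbers. The function names are ours, and we assume decimal units (1 MB = 8 Mb, 1 TB = 1,000,000 MB).

```typescript
const SECONDS_PER_HOUR = 3600;
const BITS_PER_BYTE = 8;

// Megabytes transferred by one meeting, counting upload and download together.
function meetingTransferMB(uploadMbps: number, downloadMbps: number, hours: number): number {
  const megabits = (uploadMbps + downloadMbps) * hours * SECONDS_PER_HOUR;
  return megabits / BITS_PER_BYTE;
}

// Whole meeting hours that fit into a given transfer limit.
function meetingHoursWithin(limitMB: number, uploadMbps: number, downloadMbps: number): number {
  return Math.floor(limitMB / meetingTransferMB(uploadMbps, downloadMbps, 1));
}

const perMeetingMB = meetingTransferMB(10, 10, 1);
console.log(perMeetingMB); // 9000
console.log(perMeetingMB * 4); // 36000
console.log(meetingHoursWithin(2_000_000, 10, 10)); // 222
```

The last line shows how many one-hour meetings of this size would fit into a 2 TB monthly transfer limit.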

In our case, we decided to host our Coturn on Linode, which at the time we created our app was best suited to our needs. $10 a month got us a single core virtual machine with network bandwidth of 2000 Mbps and a transfer limit of 2 TB. The bandwidth should be sufficient for 10 parallel meetings for 5 participants and 2 TB of transfer should cover a total of over 220 meeting hours.

Summary

The work we did to integrate video conferencing into our product helped us launch new services that saved our business at a critical point in time. Remote classes will stay with us long after lockdowns become a thing of the past.

What we consider our key achievement is how quickly we were able to go from zero to a working in-house video chat solution. Despite the fact that we had never created one before, we were able to research and develop a working prototype in 2 weeks.

While we’re not yet ready to consider ourselves experts in the field of video conferencing solutions, we sure feel like we have a solid foundation to become experts! ☺

Stay tuned for a follow-up article where we will discuss our real-world results and further improvements to our product.